The Rise of Private Generative AI and the Need for Enterprise Tools

As the potential of Generative AI becomes increasingly apparent, more and more organizations are looking to harness its power for their specific use cases. However, deploying AI models in a private, secure, and compliant manner presents significant challenges.

While public AI models like OpenAI and Anthropic have demonstrated remarkable capabilities, many organizations require the ability to leverage private and often sensitive data sources and deploy them in a controlled environment. This is especially critical for industries with strict data privacy and compliance requirements, such as healthcare, FSI and Government. Private Generative AI allows companies to leverage the power of AI while ensuring that sensitive data remains secure and confidential.

There are countless “toy” demos and examples for building Retrieval Augmented Databricks (RAG) systems but rely on bolting multiple services together and leveraging public AI services. Other demos that I have seen that use self hosted models are really at best “science experiments” that are complicated to setup, deploy and near impossible to manage and monitor in production (if the app ever makes it to production).

Databricks: A Comprehensive Platform for Private Generative AI

This is where platforms like Databricks are stepping up to provide a comprehensive suite of tools to streamline the deployment, monitoring, and management of private Generative AI applications.

I came across the Databricks solution after they released there DBRX open source model. After lots of research and experimenting I really think Databricks is the perfect solution for Enterprises looking to take there first steps into the world of GEN AI or who are looking for a more enterprise ready turn key solution.

Key features of the platform include:

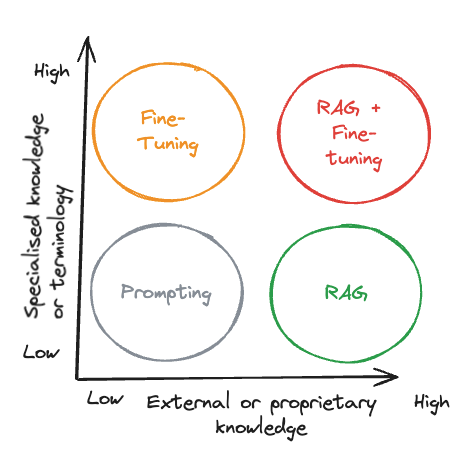

- Vector Search: Store and leverage private/sensitive data through embeddings search to enable RAG based applications. Fully managed and integrated with Unity Catalog and Model Serving.

- Fine-tuning in AutoML: Brings a low-code approach to fine-tuning LLMs securely using enterprise data, with the resulting model owned by the customer.

- Curated open source models: A list of models like the newly released DBRX, MPT-7B, Falcon-7B, and Stable Diffusion, optimized for performance and cost on Databricks Model Serving.

- MLflow 2.5: Introduces MLflow AI Gateway and MLflow Prompt Tools for comparing model outputs and deploying to production1.

- Databricks Model Serving: Optimized for LLM inference with up to 10x lower latency and reduced costs.

- Databricks Lakehouse Monitoring: Provides end-to-end visibility into data pipelines for monitoring, tuning, and improving performance, leveraging Unity Catalog for lineage insights.

These innovations aim to unify the data and AI platform, allowing organizations to develop generative AI solutions faster by bringing together data, models, LLMOps, monitoring, and governance on the Databricks Lakehouse Platform

Getting Started with Databricks

If you’re new to Databricks and want to explore its capabilities for private Generative AI I am going go to do future posts on the following:

- Set up a Databricks trial environment

- Configure the environment, including creating a cluster, external storage and code fixes for issue I ran into and resolved.

- Explore the demos and tutorials provided by Databricks to showcase their Generative AI capabilities.

Stay tuned!

Leave a comment